Image Hashing

Simple, Fast, and Scalable Reverse Image Search Using Perceptual Hashes and DynamoDB

How we built our first iteration of content matching at Canva.

Canva hosts a huge, ever-growing collection of user media that needs to be stored and managed properly. The diversity of this media poses challenges around moderation and around reducing unnecessary duplicate content. To address this, one tool we use is perceptual hashing, together with an internally built reverse image search system.

This blog post describes the design and process we went through to build such a system to the scale of Canva.

Hashing

Hashing is one simple way of implementing a reverse image search. A hash function is an algorithm that maps data of any size to fixed-size values called hash keys, which are commonly represented as strings. With a good hash function, two different inputs are very unlikely to produce the same key. Common hash algorithms include MD5 and SHA.

The ability to map any file to an effectively unique hash key is useful for matching exact duplicates, but it is of limited use for images.

For instance, you could implement a simple reverse image search in an image library using the image file hash key as a primary key in a database.

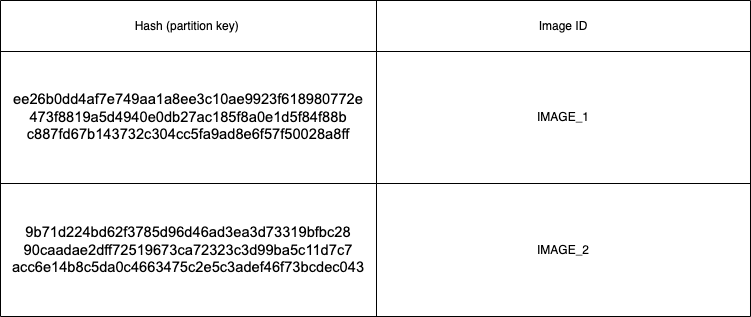

In this example, we have two visually duplicated images (IMAGE_1 and IMAGE_2) whose SHA512 hash keys are the primary keys in a DynamoDB table, and their image IDs are the secondary keys.

Even though the images are visually duplicated, it is evident that their hash keys can be totally different, even if there is a single pixel of difference.

In an image library, multiple images are visually similar but can have minor quality or metadata changes. All of these images will have different hash keys. Hence, a reverse image search using simple hashing is only useful in the context of perfectly duplicated images.
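As a rough illustration of this sensitivity, the sketch below hashes two byte strings that differ in a single byte. The byte strings are hypothetical stand-ins for image files, not real image data, and the snippet only uses Python's standard `hashlib` module.

```python
import hashlib

# Two stand-in "files" that differ by a single byte (hypothetical data,
# not real image bytes).
image_1 = bytes([0, 0, 0, 255] * 16)
modified = bytearray(image_1)
modified[0] = 1  # change exactly one byte
image_2 = bytes(modified)

hash_1 = hashlib.sha512(image_1).hexdigest()
hash_2 = hashlib.sha512(image_2).hexdigest()

# Count how many hex characters differ between the two digests.
differing = sum(a != b for a, b in zip(hash_1, hash_2))

print(hash_1[:16], hash_2[:16])
print(f"{differing} of {len(hash_1)} hex characters differ")
```

Because nearly every character of the digest changes, the two keys share nothing that a lookup could use to relate the files to each other.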

Perceptual hashing

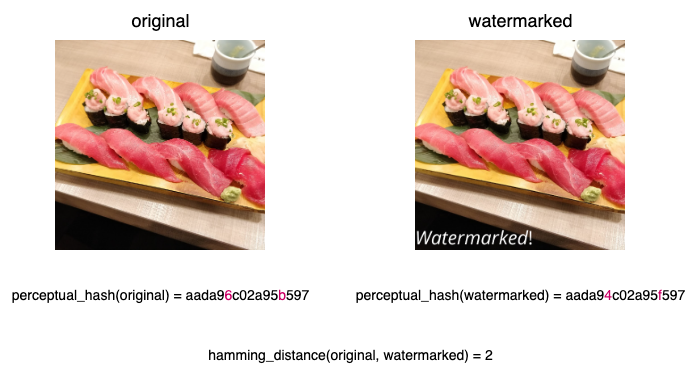

While cryptographic hashes like SHA512 create hashes of raw bytes, perceptual hashes are created based on the actual pixels of an image. Perceptual hashes change along with the visual change in the images. Hence, a reverse image search using perceptual hashing can be done by calculating the Hamming distance between two hashes, that is, the number of positions at which they differ. The smaller the distance, the more similar the images.

Perceptual hashing algorithms can use various transformations, and some are more effective than others for matching particular image types, like photos or vectors. For this blog post, we'll use the pHash algorithm as an example, since it produces shorter hashes that are easier to use in examples.
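To make the idea of hashing pixels concrete, here is a minimal sketch of a much simpler perceptual hash, the average hash. This is not pHash, which is built on a discrete cosine transform. The function name `average_hash` and the tiny 4x4 grayscale grids are assumptions made for illustration; a real implementation would first decode the image and downscale it to a small fixed size.

```python
def average_hash(pixels):
    """Return a hex hash for a grayscale image given as rows of 0-255 ints.

    Each pixel contributes one bit: 1 if it is brighter than the mean
    brightness of the whole image, 0 otherwise.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = "".join("1" if p > mean else "0" for p in flat)
    width = (len(bits) + 3) // 4  # number of hex digits needed
    return f"{int(bits, 2):0{width}x}"


# Hypothetical 4x4 grayscale image: a simple checkerboard of blocks.
original = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
# The same image, uniformly brightened by 20.
brightened = [[p + 20 for p in row] for row in original]
# The same image with one pixel overwritten (a tiny "watermark").
edited = [row[:] for row in original]
edited[0][0] = 250

print(average_hash(original))    # 33cc
print(average_hash(brightened))  # 33cc
print(average_hash(edited))      # b3cc
```

The brightness change leaves the hash untouched and the one-pixel edit flips a single bit, which is the behavior that makes distance-based matching possible.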

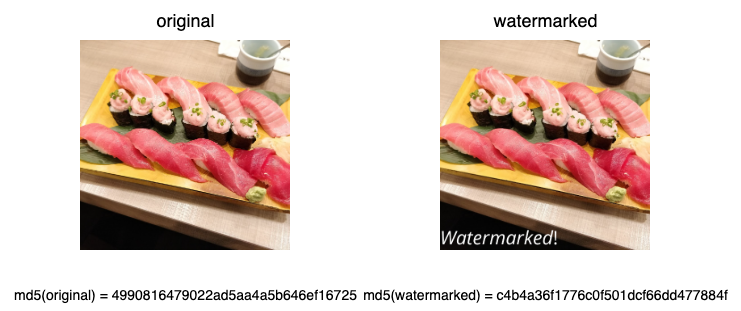

The original image above is modified with a watermark. Since it's a minimal modification, there is only a small Hamming distance of 2 between them. With more changes, there would be a larger Hamming distance. If there were no modifications, their hashes would have a Hamming distance of 0. Perceptual hashes and Hamming distances can be used to build a system that can find modified images.
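A Hamming distance can be counted per character or per bit, depending on how the hash is represented. The sketch below shows both; the function names and the sample hashes are made up for illustration and are not taken from the images in this post.

```python
def hamming_chars(hash_a: str, hash_b: str) -> int:
    """Number of character positions at which two equal-length hashes differ."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(hash_a, hash_b))


def hamming_bits(hash_a: str, hash_b: str) -> int:
    """Number of differing bits between two equal-length hex-encoded hashes."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    # XOR leaves a 1 bit wherever the two hashes disagree.
    return bin(int(hash_a, 16) ^ int(hash_b, 16)).count("1")


# Hypothetical hashes of an original image and a lightly modified copy.
original_hash = "a1b2c3d4"
modified_hash = "a1b0c3d5"

print(hamming_chars(original_hash, original_hash))  # 0
print(hamming_chars(original_hash, modified_hash))  # 2
print(hamming_bits(original_hash, modified_hash))   # 2
print(hamming_bits("ffff", "0000"))                 # 16
```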

Matching perceptual hashes

A perceptual hash matching system can take a hash and a Hamming distance as input. The system output would be all the stored hashes within that Hamming distance of the input hash. The previous example cannot be used to create this system, as it only supports matching when hashes are equal. Supporting this type of query needs a different data structure. In addition, the system should have the right level of precision and recall. Ideally, it has high precision while avoiding false negatives.

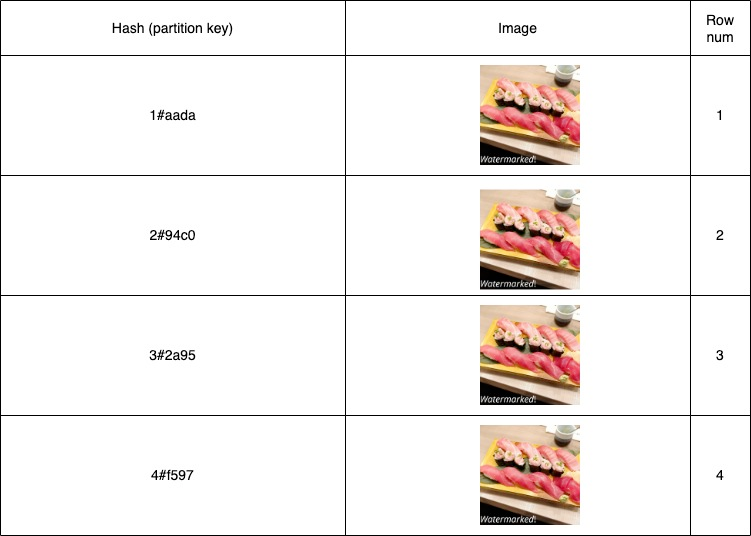

To do this, we implemented an algorithm called multi-index hashing (Norouzi, 2012) on top of DynamoDB tables. The algorithm involves splitting the query hash and stored hashes into segments (more on this later). This solution was chosen because it can guarantee finding all hashes within a given Hamming distance at high scale. Here's an example of what the entries in the database could look like for a perceptual hash:

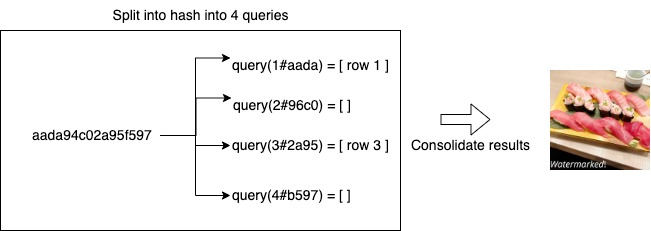

The hash is split into 4 parts, with each key prefixed with the index of that part. The same split is applied to the hash of the query image at query time, so that its parts can be compared against the stored ones. The image ID is the sort key, which keeps entries unique when duplicates share the same hash.

With the four hashes, four separate DynamoDB queries are run. Then, a consolidation step is run on the results to remove duplicates and filter out hashes with a Hamming distance higher than the threshold.
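The split, lookup, and consolidation steps can be sketched with an in-memory dictionary standing in for the DynamoDB table. Everything here is an assumption made for illustration: the `HashIndex` class, the `"index:segment"` key format, the 16-character sample hashes, and the use of a character-level Hamming distance. It also assumes the hash length is divisible by the split count.

```python
from collections import defaultdict

SEGMENTS = 4  # split count used in the example above


def split_hash(hash_value, segments=SEGMENTS):
    """Split a hash into equal parts, each prefixed with the index of that part."""
    size = len(hash_value) // segments
    return [f"{i}:{hash_value[i * size:(i + 1) * size]}" for i in range(segments)]


def hamming(hash_a, hash_b):
    """Character-level Hamming distance between two equal-length hashes."""
    return sum(a != b for a, b in zip(hash_a, hash_b))


class HashIndex:
    """In-memory stand-in for the table: partition key -> {image_id: full hash}."""

    def __init__(self):
        self.table = defaultdict(dict)

    def put(self, image_id, hash_value):
        # One entry per segment; the image ID plays the role of the sort key.
        for key in split_hash(hash_value):
            self.table[key][image_id] = hash_value

    def search(self, query_hash, max_distance):
        # One lookup per segment; merging into a dict removes duplicates.
        candidates = {}
        for key in split_hash(query_hash):
            candidates.update(self.table.get(key, {}))
        # Consolidation: keep only candidates within the distance threshold.
        results = {}
        for image_id, stored_hash in candidates.items():
            distance = hamming(query_hash, stored_hash)
            if distance <= max_distance:
                results[image_id] = distance
        return results


index = HashIndex()
index.put("IMAGE_1", "a1b2c3d4e5f60718")
index.put("IMAGE_2", "a1b2c3d4e5f60719")  # one character away from IMAGE_1
index.put("IMAGE_3", "a1b2ffffffffffff")  # shares only the first segment
index.put("IMAGE_4", "0000000000000000")  # shares no segment

print(index.search("a1b2c3d4e5f60718", max_distance=3))
# {'IMAGE_1': 0, 'IMAGE_2': 1}
```

IMAGE_3 is returned by the first segment lookup but dropped during consolidation, which is the kind of wasted read that becomes expensive when many stored hashes share a segment.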

Insights

This solution can find similar hashes with the runtime of a DynamoDB GetItem query. However, its effectiveness depends on the hash split count and the nature of the images. In particular, the hash split count was one of the critical decisions that had to be made and was why the implementation was unusable at rollout.

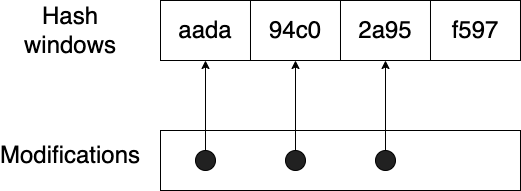

In the above example, the hash is split into 4 parts. With a 4-way split, it is guaranteed that all results with a Hamming distance of 3 or less can be found. This is due to the pigeonhole principle. For example, if there are n slots that the hash is split into, and n - 1 character changes to distribute, it is guaranteed that at least one of the slots has no characters changed.
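The guarantee can be checked by brute force for a small case. The sketch below is only an illustration under assumed values (a 16-character hash split into 4 equal slots); the function name is hypothetical. It tries every possible placement of the changed characters and reports whether at least one slot always stays untouched.

```python
from itertools import combinations


def untouched_slot_always_exists(hash_length, slots, changes):
    """Check every placement of `changes` edited characters in the hash.

    Returns True if, for every placement, at least one slot contains no
    edited character. Assumes hash_length is divisible by slots.
    """
    size = hash_length // slots
    for positions in combinations(range(hash_length), changes):
        touched = {p // size for p in positions}
        if len(touched) == slots:
            return False  # every slot was modified, so no exact slot match
    return True


print(untouched_slot_always_exists(16, slots=4, changes=3))  # True
print(untouched_slot_always_exists(16, slots=4, changes=4))  # False
```

With 3 changes, some slot always matches exactly, so the corresponding lookup finds the stored hash. With 4 changes, one change can land in each slot, and a match at that distance may be missed.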

This is how there is a guarantee of finding all results within a Hamming distance threshold. Naturally, one might pick a high slot count for supporting as large a range as possible. However, increasing this number had two significant implications for the results:

- The result set increased, and

- The number of matches with high Hamming distances increased.

Immediately after rollout, there were issues that caused the system to be unusable. Queries took minutes to run, used 20x more read capacity than expected, and returned very few results. DynamoDB queries were returning an excessive number of results, almost all of which were then filtered out in the consolidation step.

Investigating the queries and their results revealed two interesting insights:

- For one, it was common for users to upload duplicates of the same image. This meant the stored hashes were far less unique than initially expected, leading to more results per search.

- The other problem involved the hashing algorithm. The perceptual hash being used produces fairly uniform hashes for non-complex images like single-colored shape vectors (e.g., AAAAAAAA). This meant that many vectors in our database had the same partition keys. If any query hash matched a slot with these uniform hashes, hundreds of thousands of vector hashes would be returned in the result set.

To fix these issues, two changes were made:

- First, the window count was reduced 4x to reduce the number of results that the system filtered. After each reduction, tests were done to ensure that no relevant image matches were lost.

- Then we chose to skip hashing low-complexity images. These were easy to pick out because the hashes generally consisted of a single repeated character, like AAAAAAAA.
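A filter of this kind can be very small. The sketch below treats a hash as low complexity when it is one character repeated; this exact rule and the function name are assumptions for illustration, since only the general pattern is described above.

```python
def is_low_complexity(hash_value: str) -> bool:
    """True when the hash is a single character repeated, e.g. 'AAAAAAAA'."""
    return len(set(hash_value)) <= 1


# Hypothetical hashes waiting to be indexed.
incoming = ["AAAAAAAA", "a1b2c3d4", "ffffffff", "a1b0c3d5"]
to_index = [h for h in incoming if not is_low_complexity(h)]

print(to_index)  # ['a1b2c3d4', 'a1b0c3d5']
```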

These changes reduced the number of rows returned from hundreds of thousands to tens for all the previously problematic queries, fixing the issues that occurred at rollout.

How well does it run?

Some of the highlights of the system are:

- Hashes for over 10 billion images in DynamoDB.

- Average query time of 40ms, p95 at 60ms.

- Our peak workload is in excess of 2000 queries per second, with no performance degradation at that utilization, which maps to ~10k DynamoDB RCU capacity.

- Catches watermarking, quality changes, and minor modifications.

Applications at Canva

We've already been leveraging the system for various use cases at Canva. One use case comes from the sheer amount of media that we store, which is comparable to that of most large media-based tech companies. Storing it comes at a significant cost, and deduplication of bytes is one way to reduce that cost.

Since we now have an image hash table, we can analyze the number of unique hashes and determine the deduplication percentage of all our images. This can even be extended to identifying "similar" images as well, finding those instances of light modification.
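As a small illustration of that analysis, the sketch below computes the share of images whose hash duplicates another image's hash. The function name and the sample hashes are hypothetical; at real scale this would run as a batch job over the table rather than over an in-memory list.

```python
def duplicate_percentage(hashes):
    """Percentage of images that are exact-hash duplicates of another image."""
    if not hashes:
        return 0.0
    unique = len(set(hashes))
    return 100.0 * (len(hashes) - unique) / len(hashes)


# Five images, three distinct hashes: two of the five are redundant copies.
sample = ["a1b2c3d4", "a1b2c3d4", "0f0f0f0f", "12345678", "a1b2c3d4"]
print(duplicate_percentage(sample))  # 40.0
```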

For content moderation, a system operating on perceptual hashes gives us the ability to quickly act on known illegal, graphic, or dangerous media across our dataset. This means that we can action media takedowns at scale in a matter of seconds with tooling, rather than manual intervention.

What's next?

Perceptual hashing is only one tool in the toolkit for managing a large media footprint. Its functionality is only just being explored at Canva and we look forward to improving hit rates against other transforms such as images within images.

Acknowledgements

Huge thanks to everyone in the media platform team for helping to get this project through. Special thanks to:

- Jacky for coaching and supporting me throughout the project

- Oliver for helping with the implementation and for co-owning the service

- Michael and Raymond for providing their security perspective and expertise.

Want to work on scaling media storage and access at Canva? Join us!